-

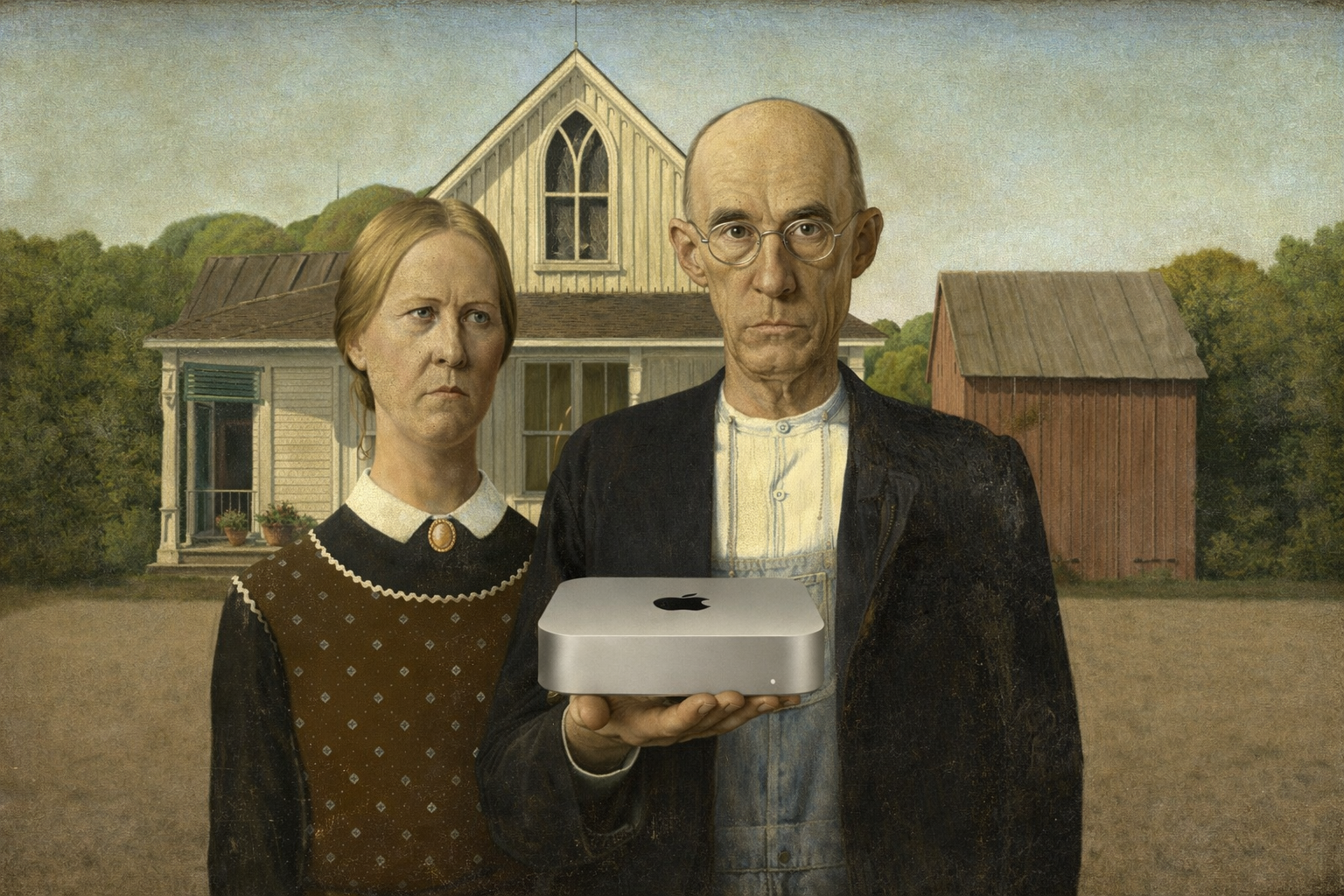

The Laptop Rule and the White-Collar Delusion

Shane Legg’s “Laptop Rule” cuts straight to the weak spot of modern white-collar work: if it lives entirely on a screen, AI can probably learn it.

-

The Hidden Cost of AI Speed: Why “More Output” Feels Like Burnout

AI boosts output but shifts the burden onto human judgment. Here’s why that exhausts us, and some practical ways to regain control.

-

COBOL Was Never the Product. The Toll Booth Was.

Anthropic’s COBOL pitch is not really about old code. It threatens the costly consulting bottleneck that surrounds the legacy systems of banks, insurers, and government agencies.

-

When Markets React to Perceived Disruption: Claude Code Security and the Cybersecurity Sector

Anthropic’s Claude Code Security announcement triggered a sharp drop in cybersecurity stocks, highlighting investor fears about AI-driven disruption.

-

Distillation Attacks on Large Language Models: Motives, Actors and Defences

This essay examines unauthorized distillation of AI models. It profiles actors such as DeepSeek, looks at their motives, including cost reduction and cloning another model’s performance, and reviews the defences major AI companies have put in place.

gekko